Bayesian Brain Hypothesis

Continuing on to another fascinating theory in the field of neuroscience: the Bayesian Brain Hypothesis. As we are all aware, Bayesian statistics is the probabilistic methodology for updating beliefs with evidence, using conditional probability on your priors. It should be a no-brainer to expect a theory that extrapolates the Bayesian belief system to our cognitive system. For those who need a background on what Bayesian statistics is, please refer to this link. So let’s get into the Bayesian Brain Hypothesis and understand what exactly it has to offer us.

It formulates perception as a constructive process based on internal, or generative, models. It states that the brain holds an internal model of the world, which it tries to optimise using sensory inputs. This is analogous to analysis by synthesis: a method in which a signal is fed to a model, and the model tries to reproduce (synthesise) that signal from its own internal representation. The optimisation problem is then to adjust the model until the signal it synthesises matches the signal it was fed.

Central to this hypothesis is a probabilistic model that can generate predictions, against which sensory samples are tested to update beliefs about their causes. This can be formulated in terms of Bayes’ theorem.
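For reference, Bayes’ theorem in the vocabulary of this post (causes and sensory data) reads as follows. The wording of the terms is chosen to match the decomposition discussed next.

```latex
P(\text{causes} \mid \text{sensory data})
  = \frac{P(\text{sensory data} \mid \text{causes}) \; P(\text{causes})}
         {P(\text{sensory data})}
```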

Understanding the Model

The model can be decomposed into four terms: the likelihood, P(sensory data | causes); the prior, P(causes); the evidence, P(sensory data); and the posterior, P(causes | sensory data). To understand this decomposition, let’s say my optic nerve receives a sensory input: light of certain wavelengths bouncing off the surface of an object, namely a “cat”. Here the sensory data is the light entering the retina, and its cause is the cat. The likelihood is the probability of receiving this particular sensory data given a cat, i.e. how likely it is that the object generates this kind of sensation in the nervous system. The prior, as mentioned, covers the objects we recognise: how probable we believe each of them to be before any sensation arrives. The evidence is the probability of the sensation itself, the one generated inside the brain while we look at the cat, whatever its cause. The posterior answers the reverse question: given a sensation inside the brain, what could be the source causing it? It is like blindfolding you, giving you a neural stimulus analogous to the one that generates the image of a cat in your brain, and then asking you what you think the object is.
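To make the four terms concrete, here is a minimal sketch of the cat example in Python. The candidate causes and all the probabilities are made-up numbers for illustration only, and the function name `posterior` is hypothetical.

```python
def posterior(prior, likelihood):
    """Return P(cause | sensory data) for every candidate cause.

    prior:      dict mapping cause -> P(cause)
    likelihood: dict mapping cause -> P(sensory data | cause)
    """
    # Evidence P(sensory data): likelihood * prior summed over all causes.
    evidence = sum(likelihood[c] * prior[c] for c in prior)
    # Bayes' theorem applied to each cause in turn.
    return {c: likelihood[c] * prior[c] / evidence for c in prior}


# Hypothetical numbers: what we expect to meet before looking (prior) ...
prior = {"cat": 0.3, "dog": 0.5, "fox": 0.2}
# ... and how likely each object is to produce the sensation we just received.
likelihood = {"cat": 0.8, "dog": 0.1, "fox": 0.3}

beliefs = posterior(prior, likelihood)
best = max(beliefs, key=beliefs.get)
print(beliefs, best)
```

Even though "dog" has the largest prior here, the sensation is so much more likely under "cat" that the posterior favours the cat.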

While exploring the Bayesian Brain Hypothesis, I came across this gem of a statement, which I had never thought to consider or contemplate before.

One criticism of Bayesian treatments is that they ignore the question of how prior beliefs, which are necessary for inference, are formed.

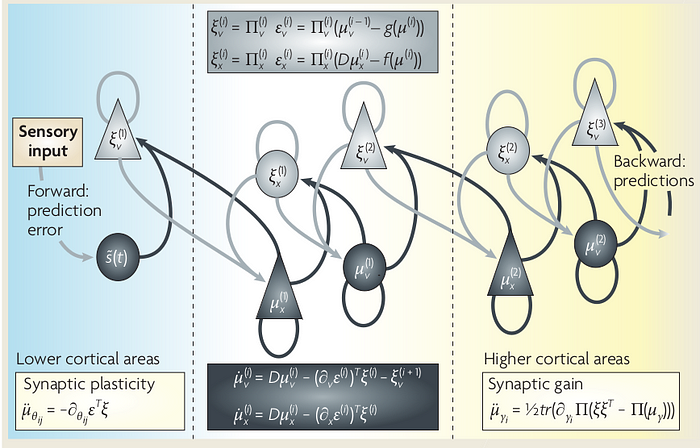

The statement above could become a field of research in itself. Since inference depends on the priors, how those priors are formed matters a lot too. “Bayhm” (explosive sound), let’s get back on track and not get carried away by feelings here. There are two major issues that the Bayesian Brain Hypothesis has to account for: 1) how generative models are manifest in the brain, which is explained by hierarchical generative models; and 2) how the recognition density (refer to the previous blog post for it) is encoded by physical attributes of the brain, such as synaptic activity, efficacy and gain.

Hierarchical generative models

… causes in hierarchical models of sensory input. (Figure source: Nature Reviews Neuroscience.)

The above diagram shows the putative cells of origin of forward driving connections that convey prediction error (grey arrows) from a lower area (for example, the lateral geniculate nucleus) to a higher area, and nonlinear backward connections (black arrows) that construct predictions. In this scheme, the sources of forward and backward connections are superficial and deep pyramidal cells (upper and lower triangles), respectively, where state units are black and error units are grey.

Generative model’s mathematical expression

The brain has to explain complicated dynamics on continuous states with hierarchical or deep causal structure and may use models with the following form.
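A hierarchical dynamic model consistent with the symbols defined in the following paragraph can be written as below. This is a sketch that assumes the standard form used in the free-energy literature; the symbol y for the sensory data is an assumption, since it is not named in the text.

```latex
\begin{aligned}
y             &= g\big(x^{(1)}, v^{(1)}, \theta^{(1)}\big) + z^{(1)} \\
\dot{x}^{(1)} &= f\big(x^{(1)}, v^{(1)}, \theta^{(1)}\big) + w^{(1)} \\
              &\;\;\vdots \\
v^{(i-1)}     &= g\big(x^{(i)}, v^{(i)}, \theta^{(i)}\big) + z^{(i)} \\
\dot{x}^{(i)} &= f\big(x^{(i)}, v^{(i)}, \theta^{(i)}\big) + w^{(i)} \\
              &\;\;\vdots
\end{aligned}
```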

Here, g^(i) and f^(i) are continuous nonlinear functions of (hidden and causal) states, with parameters θ^(i). The random fluctuations z^(i)(t) and w^(i)(t) play the part of observation noise at the sensory level and state noise at higher levels. Causal states v^(i)(t) link hierarchical levels, where the output of one level provides input to the next. Hidden states x^(i)(t) link dynamics over time and endow the model with memory. Gaussian assumptions about the random fluctuations specify the likelihood, and Gaussian assumptions about state noise furnish empirical priors in terms of predicted motion. These assumptions are encoded by their precision (or inverse variance), Π^(i)(γ), which is a function of the precision parameters γ.
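To see what precision does under these Gaussian assumptions, here is a minimal sketch that combines a Gaussian prior with a single Gaussian observation of the same quantity. It is a textbook conjugate update, not the full hierarchical model, and the function name and numbers are hypothetical.

```python
def gaussian_posterior(prior_mean, prior_precision, obs, obs_precision):
    """Combine a Gaussian prior with one Gaussian observation.

    Precision is the inverse variance. Precisions add, and the posterior
    mean is the precision-weighted average of prior mean and observation.
    """
    post_precision = prior_precision + obs_precision
    post_mean = (prior_precision * prior_mean + obs_precision * obs) / post_precision
    return post_mean, post_precision


# A precise observation (high precision) pulls the estimate towards the data.
mean_a, prec_a = gaussian_posterior(prior_mean=0.0, prior_precision=1.0,
                                    obs=10.0, obs_precision=9.0)
# A noisy observation (low precision) leaves the estimate near the prior.
mean_b, prec_b = gaussian_posterior(prior_mean=0.0, prior_precision=9.0,
                                    obs=10.0, obs_precision=1.0)
print(mean_a, mean_b)
```

The same observation moves the estimate a lot or a little depending only on the relative precisions, which is why precision acts as a weight on prediction errors.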

Recognition dynamics and prediction error

If we assume that neuronal activity encodes the conditional expectation of states, then recognition can be formulated as a gradient descent on free energy. Under hierarchical models, error units receive messages from the state units in the same level and the level above, whereas state units are driven by error units in the same level and the level below. The error units provide bottom-up messages that drive conditional expectations μ^(i) towards better predictions, which explain away prediction error. The top-down predictions correspond to g(μ^(i)) and f(μ^(i)). This scheme suggests that the only connections that link levels are forward connections conveying prediction error to state units and reciprocal backward connections that mediate predictions.
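The recognition dynamics described above can be sketched for the simplest possible case: a single level, a static scalar cause, and Gaussian noise. Everything here is an illustrative assumption (the linear mapping `g`, the precisions `pi_y` and `pi_p`, the learning rate, the function names); it is not the full hierarchical scheme.

```python
def g(mu):
    """Hypothetical generative mapping from the cause to the predicted sensation."""
    return 2.0 * mu


def dg(mu):
    """Derivative of g with respect to mu."""
    return 2.0


def free_energy(mu, y, mu_prior, pi_y, pi_p):
    """Free energy up to constants: precision-weighted squared prediction errors."""
    eps_y = y - g(mu)        # sensory prediction error
    eps_p = mu - mu_prior    # prediction error on the cause (relative to the prior)
    return 0.5 * (pi_y * eps_y ** 2 + pi_p * eps_p ** 2)


def recognise(y, mu_prior=0.0, pi_y=1.0, pi_p=1.0, lr=0.1, steps=100):
    """Gradient descent on free energy with respect to the expectation mu."""
    mu = mu_prior
    history = []
    for _ in range(steps):
        eps_y = y - g(mu)
        eps_p = mu - mu_prior
        history.append(free_energy(mu, y, mu_prior, pi_y, pi_p))
        # Bottom-up error drives mu up the gradient of the prediction g(mu);
        # the prior error pulls mu back towards mu_prior.
        mu += lr * (pi_y * eps_y * dg(mu) - pi_p * eps_p)
    return mu, history


mu_hat, history = recognise(y=4.0)
print(mu_hat, history[0], history[-1])
```

With these settings the expectation settles where the two precision-weighted errors balance, which for this linear model is the exact posterior mean.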

Asymmetric message passing and predictions

The reciprocal exchange of bottom-up prediction errors and top-down predictions proceeds until prediction error is minimised at all levels and conditional expectations are optimised. This scheme has been invoked to explain many features of early visual responses and provides a plausible account of repetition suppression and mismatch responses in electrophysiology.

Message passing of this sort is consistent with functional asymmetries in real cortical hierarchies, where forward connections (which convey prediction errors) are driving and backward connections (which model the nonlinear generation of sensory input) have both driving and modulatory characteristics. This asymmetrical message passing is also a characteristic feature of adaptive resonance theory [47,48], which has formal similarities to predictive coding.

Conclusion

In summary, the theme underlying the Bayesian brain and predictive coding is that the brain is an inference engine that is trying to optimize probabilistic representations of what caused its sensory input. This optimization can be finessed using a (variational free-energy) bound on surprise. In short, the free-energy principle entails the Bayesian brain hypothesis and can be implemented by the many schemes considered in this field. Almost invariably, these involve some form of message passing or belief propagation among brain areas or units.
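The bound on surprise mentioned above can be written in its standard variational form. The symbols are assumptions for illustration: y is the sensory input, ϑ stands for its causes, and q is the recognition density.

```latex
F = -\ln p(y) + D_{\mathrm{KL}}\big[\, q(\vartheta) \,\|\, p(\vartheta \mid y) \,\big]
  \;\ge\; -\ln p(y)
```

Because the Kullback-Leibler divergence is never negative, the free energy F is always at least as large as the surprise, -ln p(y). Minimising F with respect to q therefore pushes the recognition density towards the true posterior over causes.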